Spring 2023 CS444

Extra Credit: Adversarial Attacks

Due date: Monday, May 8th, 11:59:59 PM. This assignment must be done individually, and no late days are allowed!

The goal of this extra credit assignment is to implement adversarial attacks on pre-trained classifiers, as discussed in this lecture.

Basic attack methods (up to 45 points)

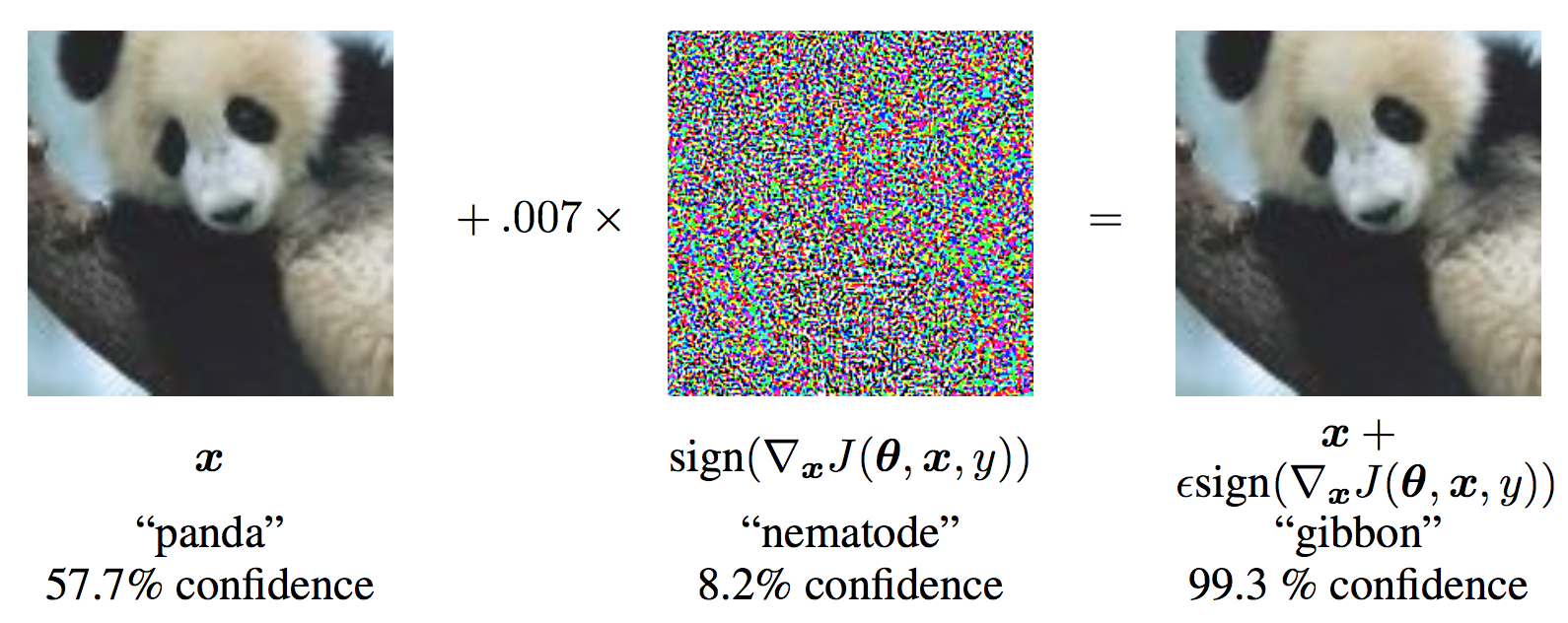

You should implement the Fast Gradient Sign Method (FGSM), the Iterative Gradient Sign Method, and the Least Likely Class Method described in the lecture above and in these papers. We also recommend going through the PyTorch tutorial for FGSM. For full points, aim to produce your own version of the plots on slide 20 of the lecture above.
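The three attacks can be sketched in PyTorch as follows. This is a minimal sketch under a few assumptions: images are scaled to [0, 1] before any mean/std normalization (so normalization should live inside `model`), the iterative attacks project each step back into an L-infinity ball of radius `eps` around the original image, and the least likely class method is treated as a targeted iterative attack toward the class the model considers least probable. The function names, the step size `alpha`, and the toy stand-in model are hypothetical, not part of any starter code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fgsm(model, x, y, eps):
    """Fast Gradient Sign Method: x_adv = clip(x + eps * sign(grad_x CE(model(x), y)))."""
    x = x.clone().detach().requires_grad_(True)
    loss = F.cross_entropy(model(x), y)
    grad, = torch.autograd.grad(loss, x)
    # Move each pixel by eps in the direction that increases the loss of the true label,
    # then keep pixels in the valid [0, 1] range.
    return (x + eps * grad.sign()).clamp(0, 1).detach()


def iterative_gradient_sign(model, x, y, eps, alpha, steps, targeted=False):
    """Iterative gradient sign method: repeated sign-gradient steps of size alpha.

    Untargeted (default): increase the loss of the true label y.
    Targeted: decrease the loss of the target label y instead.
    After each step the image is projected back into the L-infinity ball of
    radius eps around the original x, and into [0, 1].
    """
    x_orig = x.clone().detach()
    x_adv = x_orig.clone()
    for _ in range(steps):
        x_adv.requires_grad_(True)
        loss = F.cross_entropy(model(x_adv), y)
        grad, = torch.autograd.grad(loss, x_adv)
        direction = -grad.sign() if targeted else grad.sign()
        x_adv = x_adv.detach() + alpha * direction
        x_adv = torch.max(torch.min(x_adv, x_orig + eps), x_orig - eps).clamp(0, 1)
    return x_adv.detach()


def least_likely_class(model, x, eps, alpha, steps):
    """Least likely class method: targeted iterative attack toward argmin_y p(y | x)."""
    with torch.no_grad():
        y_ll = model(x).argmin(dim=1)
    x_adv = iterative_gradient_sign(model, x, y_ll, eps, alpha, steps, targeted=True)
    return x_adv, y_ll


if __name__ == "__main__":
    torch.manual_seed(0)
    # Hypothetical stand-in: replace with a pre-trained ImageNet model and Imagenette batches.
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10)).eval()
    x = torch.rand(16, 3, 8, 8)
    y = model(x).argmax(dim=1)  # the model's own clean predictions, so accuracy at eps=0 is 1.0
    for eps in [0.0, 0.01, 0.03, 0.1]:
        x_adv = fgsm(model, x, y, eps)  # needs gradients, so not wrapped in no_grad
        acc = (model(x_adv).argmax(dim=1) == y).float().mean().item()
        print(f"FGSM eps={eps:.2f}: accuracy {acc:.2f}")
```

Sweeping `eps` and recording accuracy, as in the `__main__` loop, gives an accuracy-versus-epsilon curve that is a natural starting point for the requested plots; for the least likely class attack it is also worth tracking how often the prediction lands on the targeted class.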

Rather than performing your attacks on the full ImageNet dataset, which is more than 10 GB of data, we recommend running on Imagenette, a small subset of ImageNet that contains only 10 classes. The description of the dataset can be found here. You can choose any pre-trained ImageNet model to attack. Note that these models are trained on the whole ImageNet dataset, which has 1000 labels. To attack them on Imagenette, just select the outputs corresponding to the 10 Imagenette classes. You may find this key helpful for selecting those classes.
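Restricting a 1000-way model to the Imagenette classes can be sketched like this. The index list assumes the standard ImageNet-1k class ordering used by torchvision's pre-trained models, and Imagenette labels in sorted WordNet-ID folder order (the order `torchvision.datasets.ImageFolder` assigns); please double-check it against the key above. The helper names are hypothetical.

```python
import torch

# ImageNet-1k indices of the 10 Imagenette classes, in Imagenette's sorted folder order
# (n01440764, n02102040, ..., n03888605). Assumption: standard ImageNet-1k ordering;
# verify against the class key before relying on it.
IMAGENETTE_TO_IMAGENET = [0, 217, 482, 491, 497, 566, 569, 571, 574, 701]
IMAGENETTE_NAMES = ["tench", "English springer", "cassette player", "chain saw",
                    "church", "French horn", "garbage truck", "gas pump",
                    "golf ball", "parachute"]


def imagenette_logits(logits_1000: torch.Tensor) -> torch.Tensor:
    """Keep only the 10 columns of a (batch, 1000) logit tensor that are Imagenette classes."""
    idx = torch.tensor(IMAGENETTE_TO_IMAGENET, device=logits_1000.device)
    return logits_1000.index_select(1, idx)


def imagenette_predict(logits_1000: torch.Tensor) -> torch.Tensor:
    """Predicted Imagenette label (0-9) when the model is restricted to those 10 classes."""
    return imagenette_logits(logits_1000).argmax(dim=1)


def to_imagenet_label(imagenette_label: torch.Tensor) -> torch.Tensor:
    """Map Imagenette labels (0-9), e.g. from ImageFolder, to ImageNet-1k indices."""
    table = torch.tensor(IMAGENETTE_TO_IMAGENET, device=imagenette_label.device)
    return table[imagenette_label]
```

Whether the attack loss uses all 1000 logits (with `to_imagenet_label` targets) or only the 10 restricted logits is a design choice: the first attacks the model as it was trained, while the second only asks the attack to change the prediction among Imagenette classes.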

Feel free to use the PyTorch tutorial code to get started. What you submit for your report is up to you, but the more explanations, experiments, visualizations, and analysis you provide, the more extra credit you will receive!

Anything else (up to 15 more points)

You may implement any other adversarial attack or defense method you want. Here is an incomplete list of suggestions:

Generating adversarial and preferred inputs

- Universal adversarial perturbations

- Adversarial attacks for problems beyond classification (detection, segmentation, image captioning, etc.)

- Generating preferred inputs: regularized gradient ascent starting with a random image to maximize the response of some class (see slides 30-33 of this lecture from two years ago, corresponding to this paper)
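For the preferred-inputs suggestion, regularized gradient ascent can be sketched as below. This minimal version assumes the only regularizer is an L2 penalty on the image (other regularizers such as blurring or jitter are not included), maximizes the unnormalized class score rather than the softmax probability, and takes plain SGD steps on the input. The function name and default hyperparameters are hypothetical.

```python
import torch
import torch.nn as nn


def preferred_input(model, target_class, shape=(1, 3, 224, 224), steps=200,
                    lr=0.1, l2_weight=1e-3, seed=0):
    """Regularized gradient ascent on the input, starting from small random noise:
    maximize  S_c(x) - l2_weight * ||x||_2^2,
    where S_c is the unnormalized score (logit) of target_class."""
    gen = torch.Generator().manual_seed(seed)
    x = (0.01 * torch.randn(shape, generator=gen)).requires_grad_(True)
    opt = torch.optim.SGD([x], lr=lr)  # only the image is optimized
    model.eval()
    for _ in range(steps):
        opt.zero_grad()
        objective = model(x)[:, target_class].sum() - l2_weight * x.pow(2).sum()
        (-objective).backward()  # optimizers minimize, so negate to ascend
        opt.step()
    return x.detach()


if __name__ == "__main__":
    torch.manual_seed(0)
    # Hypothetical stand-in for a pre-trained ImageNet classifier.
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
    img = preferred_input(model, target_class=3, shape=(1, 3, 8, 8), steps=50)
    print(model(img)[0, 3].item())
```

With a pre-trained ImageNet model, `target_class` is an ImageNet-1k index, and the result lives in whatever input space the model expects (for example normalized pixels), so it needs to be un-normalized before display.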

Defenses against your own implemented adversarial attacks

- A classifier attempting to detect adversarial examples

- Robust training (data augmentation with adversarial examples)

- Robust architectures (feature denoising)

- Preprocessing input images to attempt to disrupt adversarial perturbations (denoising, inpainting, etc.)
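Robust training by data augmentation with adversarial examples can be sketched as a single training step that mixes clean and adversarial loss. This minimal sketch assumes FGSM examples regenerated at every step, inputs in [0, 1], and an equal weighting of the two loss terms (`adv_weight=0.5` is an assumption, not a requirement); the helper names and the toy model are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fgsm_perturb(model, x, y, eps):
    """FGSM examples for the current model (gradients taken w.r.t. the input only)."""
    x = x.clone().detach().requires_grad_(True)
    grad, = torch.autograd.grad(F.cross_entropy(model(x), y), x)
    return (x + eps * grad.sign()).clamp(0, 1).detach()


def adversarial_training_step(model, optimizer, x, y, eps=8 / 255, adv_weight=0.5):
    """One optimizer step on a weighted mix of clean and FGSM-adversarial cross-entropy."""
    # Regenerate adversarial examples every step so they stay adversarial for the
    # model as it is being updated.
    x_adv = fgsm_perturb(model, x, y, eps)
    optimizer.zero_grad()
    clean_loss = F.cross_entropy(model(x), y)
    adv_loss = F.cross_entropy(model(x_adv), y)
    loss = (1 - adv_weight) * clean_loss + adv_weight * adv_loss
    loss.backward()
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    torch.manual_seed(0)
    # Toy stand-in for an ImageNet model fine-tuned on Imagenette (hypothetical sizes).
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))
    opt = torch.optim.SGD(model.parameters(), lr=0.01)
    x, y = torch.rand(32, 3, 8, 8), torch.randint(0, 10, (32,))
    for step in range(5):
        print(step, adversarial_training_step(model, opt, x, y, eps=0.01))
```

A natural evaluation is to run the attacks you implemented against the model before and after this kind of training and compare accuracy at each `eps`.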

Robustness to non-adversarial distribution change

- Try to print out and re-photograph adversarial examples to see whether the resulting images still attack the original network

- Apply "real-world image degradations" using the ImageNet-C perturbations on Imagenette and compare accuracy with that on the original images

- Try the model on an ImageNet dataset generated entirely with Stable Diffusion and compare accuracy with that on the original Imagenette
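A comparison against one ImageNet-C-style degradation might look like the sketch below. It implements only additive Gaussian noise, written directly in PyTorch rather than taken from the official ImageNet-C code, and the per-severity standard deviations are an illustrative assumption. The corruption should be applied to [0, 1] images before mean/std normalization. `loader` can be any iterable of `(images, labels)` batches, and the function names are hypothetical.

```python
import torch


def gaussian_noise(x: torch.Tensor, severity: int) -> torch.Tensor:
    """Additive Gaussian noise on [0, 1] images, in the style of ImageNet-C's noise corruption.
    The std for each severity (1-5) is an illustrative assumption, not the official value."""
    std = [0.08, 0.12, 0.18, 0.26, 0.38][severity - 1]
    return (x + std * torch.randn_like(x)).clamp(0, 1)


@torch.no_grad()
def accuracy_under_corruption(model, loader, corruption, severities=(1, 2, 3, 4, 5)):
    """Return {severity: accuracy}; severity 0 means the clean, uncorrupted images."""
    results = {}
    for s in (0, *severities):
        correct = total = 0
        for x, y in loader:
            x_in = x if s == 0 else corruption(x, s)
            correct += (model(x_in).argmax(dim=1) == y).sum().item()
            total += y.numel()
        results[s] = correct / total
    return results
```

Keeping the corruption as a plain `(images, severity) -> images` function makes it easy to swap in other degradations (blur, JPEG compression, and so on) while reusing the same evaluation loop.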

How you choose to show your work is up to you! Just be thorough with explanations and visualizations and we will be flexible with grading. Roughly speaking, each substantial additional technique you implement, and present with sufficient documentation and analysis, will be worth around 5 points, up to a maximum of 15.

Report Template

Please use the report template to get started on the "basic attack methods" section. You are welcome to explore additional experiments, extensions, and analyses that are substantial and interest you in the "anything else" section.

Submission Instructions

- All of your code (Python files and any .ipynb notebooks) submitted in a single ZIP file. The filename should be netid_ec_code.zip.

- A brief report in PDF format (no template, submit your results however you like). The filename you submit should be netid_ec_report.pdf.

If you wish to receive extra credit, this assignment must be turned in on time -- no late days allowed!