CAREER: Similarity-Based Representation of Large-Scale Image Collections

(NSF Grant No. 1228082)

Students: Joseph Tighe (Ph.D. 2013), Yunchao Gong (Ph.D. 2014), Megha Pandey (M.S. 2011), Hongtao Huang (M.S. 2013), Mariyam Khalid (M.S. 2014), Liwei Wang, Bryan Plummer, Cecilia Mauceri, Arun Mallya

Collaborators: Jan-Michael Frahm (UNC), Marc Niethammer (UNC), Maxim Raginsky (Duke and U of I), Julia Hockenmaier (U of I), Florent Perronnin (Xerox Research Centre Europe), Albert Gordo (Xerox Research Centre Europe), Sanjiv Kumar (Google), Qifa Ke (Microsoft Research)

This material is based upon work supported by the National Science Foundation under Grant No. 1228082.

Additional funding comes from NSF Grants No. 0916829 and 1302438, Microsoft Research, ARO, Xerox, Sloan Foundation, and DARPA.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Last updated: July 6, 2014

PROJECT GOALS

Intellectual merit: The goal of this project is to develop a representational framework that uses similarity to capture relationships in large-scale image collections. The representation is not restricted to any specific distance function, feature, or learning model. It includes new methods to combine multiple kernels based on different cues, learn fast kernel approximations, and improve indexing efficiency. In addition, new methods for nearest neighbor search and semi-supervised learning are proposed. Two major research problems are addressed: (1) defining and computing similarities between images in vast, expanding repositories, and representing those similarities efficiently so that the right pairs can be retrieved on demand; and (2) developing a system that can learn and predict similarities from sparse supervisory information and constantly evolving data.

Broader impacts: The creation of visual representations and learning algorithms capable of handling large-scale evolving multimodal data has the potential to revolutionize many scientific and consumer applications. Specific application domains include field biology, automatic localization and navigation in indoor and outdoor environments, personalized shopping and travel guides, automated assistants for the visually impaired, security and surveillance.

PUBLICATIONS AND RESOURCES


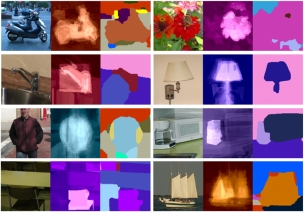

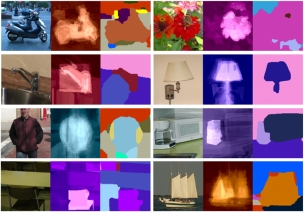

- Scene Parsing with Object Instances and Occlusion Ordering

J. Tighe, M. Niethammer, and S. Lazebnik, CVPR 2014

- Finding Things: Image Parsing with Regions and Per-Exemplar Detectors

J. Tighe and S. Lazebnik, CVPR 2013

- Understanding Scenes on Many Levels

J. Tighe and S. Lazebnik, ICCV 2011

- SuperParsing: Scalable Nonparametric Image Parsing with Superpixels

J. Tighe and S. Lazebnik, IJCV 2013


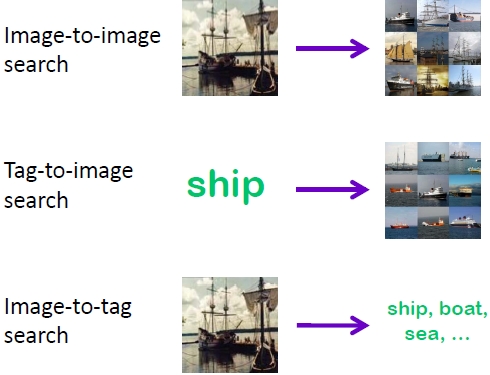

- Improving Image-Sentence Embeddings Using Large Weakly Annotated Photo Collections

Y. Gong, L. Wang, M. Hodosh, J. Hockenmaier, and S. Lazebnik, ECCV 2014

-

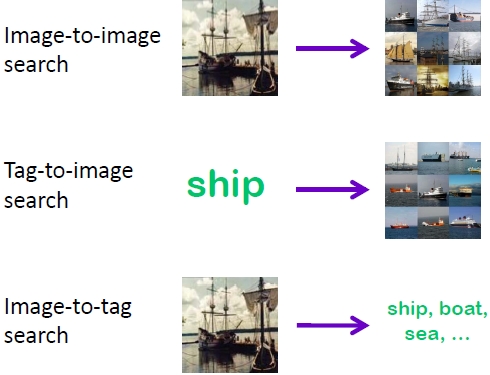

A Multi-View Embedding Space for Modeling Internet Images, Tags, and Their Semantics

Y. Gong, Q. Ke, M. Isard, and S. Lazebnik, IJCV 2014


Scene Representation

- Multi-Scale Orderless Pooling of Deep Convolutional Activation Features

Y. Gong, L. Wang, R. Guo, and S. Lazebnik, ECCV 2014

- Scene Recognition and Weakly Supervised Object Localization with Deformable Part-Based Models

M. Pandey and S. Lazebnik, ICCV 2011

Project webpage


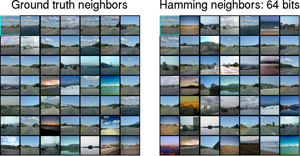

Large-Scale Similarity Search

-

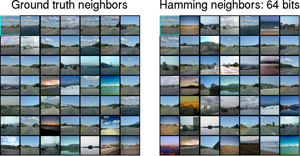

Learning Binary Codes for High-dimensional Data Using Bilinear Projections

Y. Gong, S. Kumar, H. Rowley, and S. Lazebnik, CVPR 2013

- Angular Quantization-Based Binary Codes for Fast Similarity Search

Y. Gong, S. Kumar, V. Verma, and S. Lazebnik, NIPS 2012

- Asymmetric Distances for Binary Embeddings

A. Gordo, F. Perronnin, Y. Gong, and S. Lazebnik, PAMI 2014

- Iterative Quantization: A Procrustean Approach to Learning Binary Codes

Y. Gong and S. Lazebnik, PAMI 2013

Project webpage (includes code and data)

- Comparing Data-Dependent and Data-Independent Embeddings for Classification and Ranking of Internet Images

Y. Gong and S. Lazebnik, CVPR 2011

Project webpage (includes code and data)

- Locality Sensitive Binary Codes from Shift-Invariant Kernels

M. Raginsky and S. Lazebnik, NIPS 2009


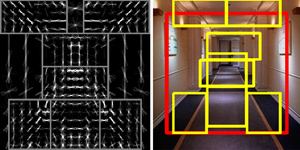

Landmark Photo Collections

- Building Rome on a Cloudless Day

J.-M. Frahm, P. Georgel, D. Gallup, T. Johnson, R. Raguram, C. Wu, Y.-H. Jen, E. Dunn, B. Clipp, S. Lazebnik, and M. Pollefeys, ECCV 2010

Project webpage, video, UNC spotlight

-

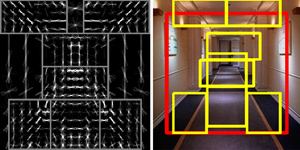

Modeling and Recognition of Landmark Image Collections Using Iconic Scene Graphs

R. Raguram, C. Wu, J.-M. Frahm, and S. Lazebnik, IJCV 2011

Project webpage
